Oracle Analytics Cloud (OAC) offers a lot of Ad-Hoc functionality for Users and developers where one can explore and analyze their data. One such feature is “Data Flows” which can be basically used to build Ad-Hoc ETLs. In these data flows, you can perform joins between multiple datasets, perform calculations / aggregations etc., to get the target table populated according to your requirement which is basically needed for further analysis / decision making.

In this article, we will walk you through the process for creating Ad-Hoc ETL and setup of incremental loads in Oracle Analytics Cloud (OAC)

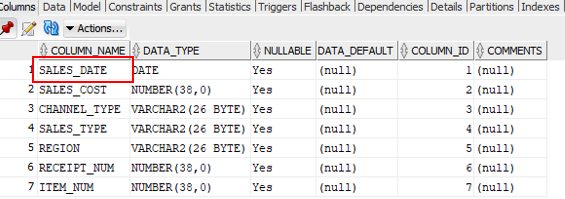

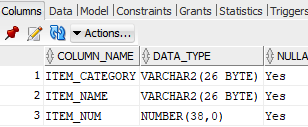

- Identify the source tables that you need to add to the Data Flow. In the following example, we are using SRC_SALES table which contains sales data and SRC_ITEM table which contains Item information. We are using SALES_DATE column in SRC_SALES to identify incremental records.

- Create two connections, one for Source database schema and another for Target database Schema

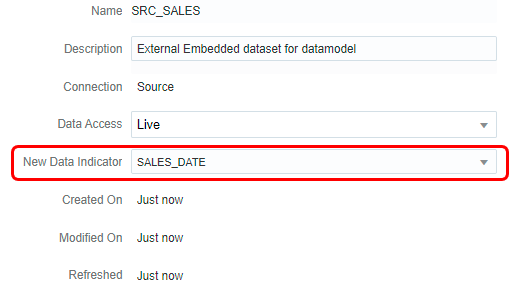

- Create a dataset based on source database schema and define a “New data identifier” and save the dataset.

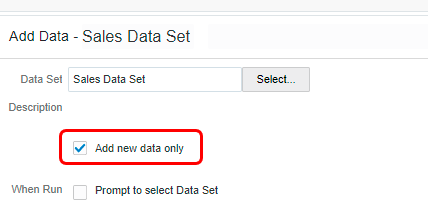

- Create a Data Flow based on source data set. Check the “Add new data only” flag. This appears only when we define a “New data identifier”. This should be active for incremental load process.

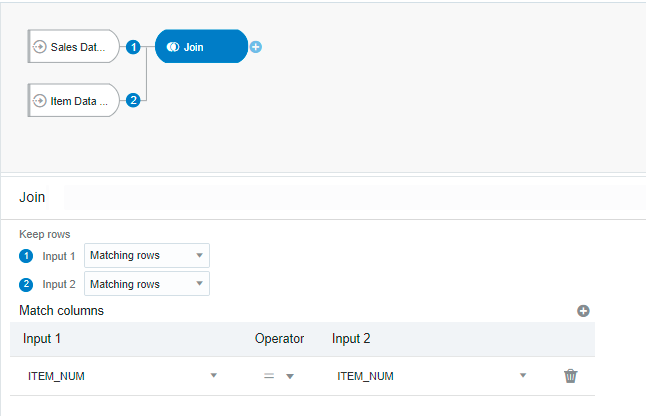

- Add another data set (Item) and join with Sales data set based on Item Number

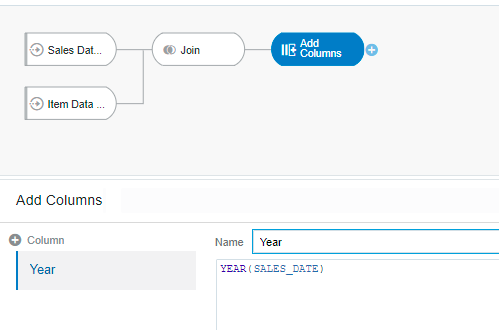

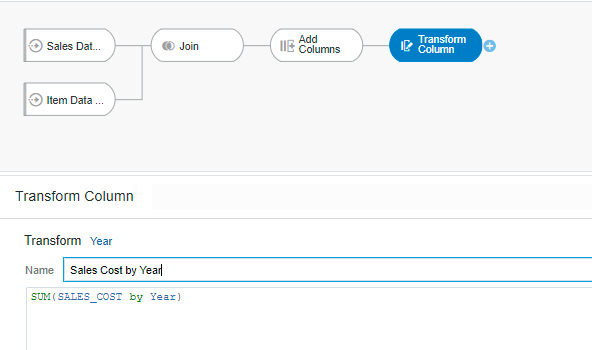

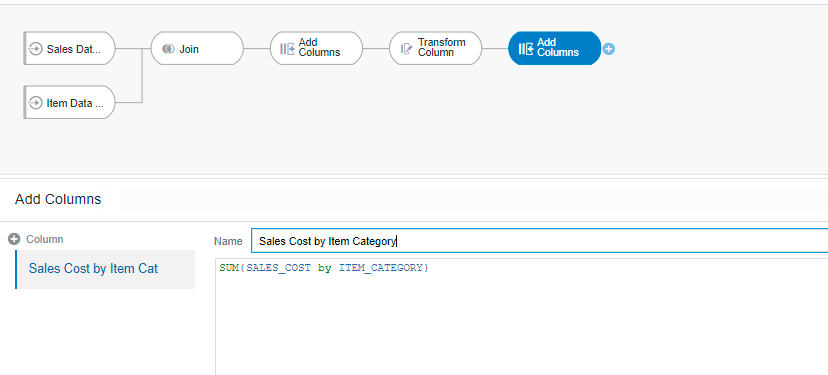

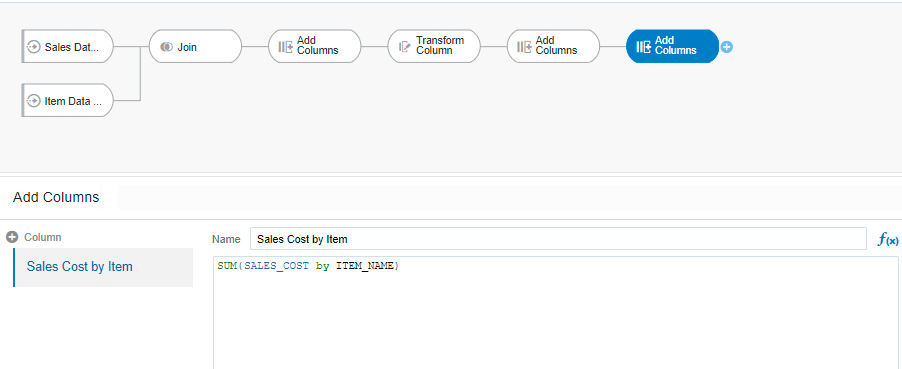

- Additional columns can also be created. In this example, we’ve added multiple columns just to show the functionality. You can use this feature and create many more measures as per the business requirement.

- Click on Validate and Apply

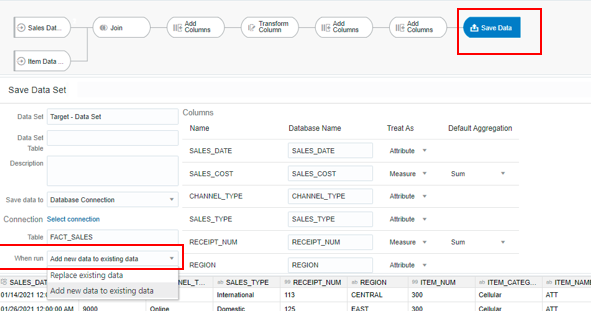

- Save the output dataset to target connection. If you select “Replace existing data” it will truncate the target table and reload all the records. With “Add new data to existing data” option, it will keep the existing records and loads only the new records in the source table. New records are identified by the column we defined in the source dataset.

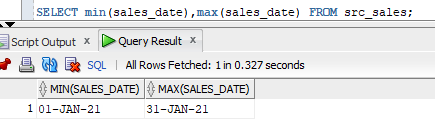

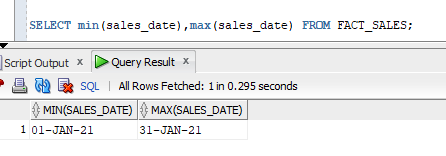

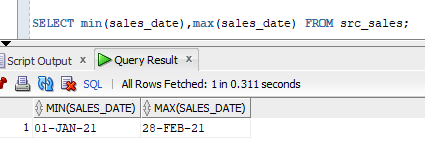

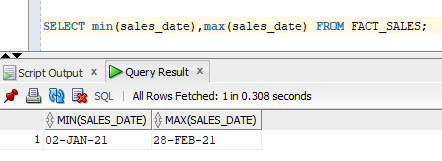

- The data flow is now ready to run. The initial run loaded all the data from source where we have data for Jan 2021. Before running the flow again, we inserted data for Feb 2021 and only the Feb 2021 data was picked up for next load. See below screenshots for checking the same.

- After adding the Feb 2021 data and rerunning the data flow.

This way, Data Flows can be created to run incremental loads, create new columns, build Aggregate tables and so on. This is a very handy feature to quickly build Ad-Hoc ETLs and load data into a target table for further analysis and decision making within Oracle Analytics Cloud (OAC).

If you need any help with your Oracle Analytics Cloud Implementations, Please drop a line here. Our Oracle Analytics Cloud experts will contact you promptly.